“Honor thy error as a hidden intention”

– Brian Eno and Peter Schmidt, The Oblique Strategies

This is the first real code I have written since I was a high school freshman. I was pretty overwhelmed playing around with P5 in class, but when I got home I decided to keep exploring. Suddenly, tiny bits and pieces of half-remembered stuff came floating back: first Booleans (because who can forget a word like “Boolean”?), then “if/then,” then “else if”… and I was off to the races. I wrote a very, very silly program and was deeply impressed with what I had discovered I could do.

And then it came time to Do the Actual Work, and I realized I had no idea. It’s one thing to gather up party tricks and various ways of making the screen do what you want it to, but quite another to use those techniques to tell a story, even a very simple one.

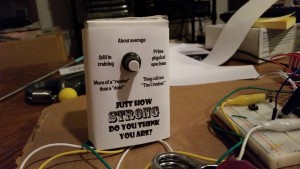

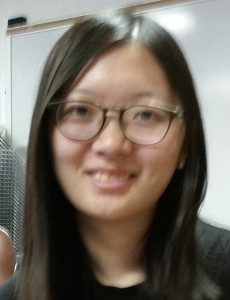

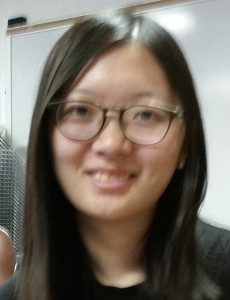

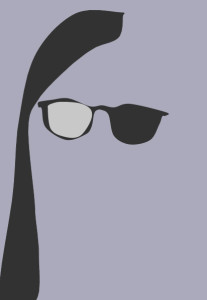

I looked through all of the available functions to see which one would give the most flexibility and ease of use, and I hit on the idea that I could use curve() like the pen tool in Photoshop, if only I could plot coordinates. So I opened my sample photo:

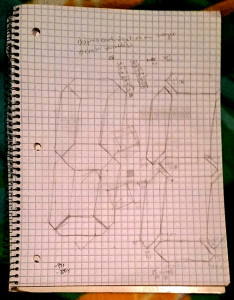

sized it to the canvas I wanted to use, moved the mouse to where I would normally put anchor points in Photoshop, and noted the x and y values on a piece of paper.

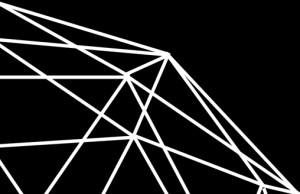

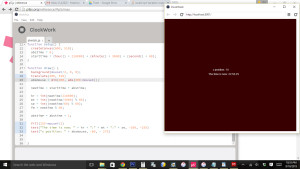

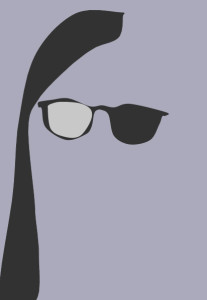
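The coordinate hunt can also be done inside p5 itself rather than on paper. The sketch below is a minimal version of that idea, not the process described above: it assumes a reference photo at the hypothetical path `photo.jpg`, and `formatPoint` is a made-up helper name. Each click prints a ready-to-paste `curveVertex()` line to the console.

```javascript
// Hypothetical helper: turn a mouse position into a line of p5 code.
function formatPoint(x, y) {
  return "curveVertex(" + Math.round(x) + ", " + Math.round(y) + ");";
}

let photo; // reference image; the file name below is an assumption

function preload() {
  photo = loadImage("photo.jpg");
}

function setup() {
  createCanvas(460, 600);
}

function draw() {
  // Stretch the photo over the whole canvas so clicks map to canvas pixels.
  image(photo, 0, 0, width, height);
}

function mousePressed() {
  // mouseX and mouseY hold p5's current mouse position on the canvas.
  console.log(formatPoint(mouseX, mouseY));
}
```

Rounding keeps the output tidy; whole pixels are plenty of precision for hand-placed anchor points.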

I then entered them into P5 (using curveVertex()), and a picture began to emerge:

but not a very compelling one. It’s amazing how difficult it is to draw by conjecture and guesswork, using only numbers and no visual interface. Even with a set of likely points, I was getting weird, lumpy, incoherent shapes, and had absolutely no meaningful way of resolving them.
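Part of the lumpiness may come from how `curveVertex()` treats its endpoints: in p5.js 1.x the first and last points of a shape only guide the curve and are not drawn, so a list of traced points produces a shorter line than expected. A common workaround, sketched here with made-up coordinates and a hypothetical `withGuidePoints` helper, is to repeat the first and last points.

```javascript
// Hypothetical helper: repeat the first and last points so that every
// traced point ends up on the drawn curve.
function withGuidePoints(points) {
  if (points.length === 0) return [];
  const first = points[0];
  const last = points[points.length - 1];
  return [first, ...points, last];
}

// Made-up anchor points, standing in for the ones noted on paper.
const jawline = [[120, 300], [160, 420], [230, 470], [300, 420], [340, 300]];

function setup() {
  createCanvas(460, 600);
  noLoop(); // static drawing, one frame is enough
}

function draw() {
  background(150);
  noFill();
  stroke(0);
  beginShape();
  for (const [x, y] of withGuidePoints(jawline)) {
    curveVertex(x, y);
  }
  endShape();
}
```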

I then had a think, reread the assignment, and decided that it might be more edifying to try to use the actual shapes in the program. It was still painfully slow going, but I developed a process: everything (save a couple of choice elements) would be simplified and symmetrical. I would make the left feature first, “perfect” it by modifying axes, curves, etc., then copy it to the right. In looking for a way to rotate shapes I came across the translate() function, which helped immensely: I could put the center of the face at the origin of my coordinate system and copy elements from left to right simply by flipping the sign of the x values.
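The left-to-right copy can be automated once the origin sits on the face's center line. This is a small sketch of that idea, assuming a hypothetical `mirrorX` helper; the temple coordinates are the ones used in the final code, and the two translate() calls there are folded into one.

```javascript
// Hypothetical helper: mirror a flat [x1, y1, x2, y2, ...] list across the
// vertical axis by negating every x value (the even-indexed entries).
function mirrorX(coords) {
  return coords.map((value, i) => (i % 2 === 0 ? -value : value));
}

// Left temple coordinates, taken from the sketch's own quad() call.
const leftTemple = [-115, -45, -70, -45, -70, -5, -115, -35];

function setup() {
  createCanvas(460, 600);
  noLoop();
}

function draw() {
  background(150);
  translate(230, 265); // put the origin on the face's center line
  fill(0);
  noStroke();
  quad(...leftTemple);          // left feature, tuned by hand
  quad(...mirrorX(leftTemple)); // right feature, derived from the left
}
```

This works directly for point-based shapes such as quad(). An arc that is not itself symmetrical would also need its start and stop angles reflected, so it is only a partial shortcut.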

But it was still mighty slow going, all trial and error and moving things five pixels at a time. It’s also amazing how resonant the idea of a face is, and how strong the effect is when any element is “off.”

But here it is.

My code is below – there are still a couple of abandoned shapes in there, examples of things I tried that didn’t work. And there’s a very inelegant (since it repeats almost the entire code) odd-interval blinking function that I couldn’t resist throwing in.

function setup() {

createCanvas(460, 600);

}

function draw() {

background(150);

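// blink (eyes closed) when the seconds value is a multiple of 5 and the sine term is near zero

if ((second() % 5 === 0) && (abs(sin(millis() / 1000)) <= 1 / 4)) {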

//left hair 1

fill(50);

noStroke();

translate(230, 260);

quad(-40, 200, -80, -60, -127, -60, -120, 200);

//right hair 1

fill(50);

noStroke();

quad(40, 200, 80, -60, 122, -64, 115, 200);

// face

fill(220);

stroke(0);

strokeWeight(3);

ellipse(0, 0, 214, 260);

//left temple

fill(0);

noStroke();

translate(0, 5);

quad(-115, -45, -70, -45, -70, -5, -115, -35);

//right temple

fill(0);

noStroke();

quad(115, -45, 70, -45, 70, -5, 115, -35);

//nose bridge

noFill();

stroke(0);

strokeWeight(5);

arc(0, -13, 65, 57, PI+QUARTER_PI, -QUARTER_PI);

//bottom of left lens

fill(240);

stroke(0);

strokeWeight(3);

arc(-55, -59, 90, 135, QUARTER_PI / 2, PI-(QUARTER_PI/2), OPEN);

//top of left lens

fill(240);

stroke(0);

strokeWeight(3);

arc(-55, -23, 100, 60, PI+QUARTER_PI / 2, 2 * PI-(QUARTER_PI/2), OPEN);

//bottom of right lens

fill(240);

stroke(0);

strokeWeight(3);

arc(55, -59, 90, 135, QUARTER_PI / 2, PI-(QUARTER_PI/2), OPEN);

//top of right lens

fill(240);

stroke(0);

strokeWeight(3);

arc(55, -23, 100, 60, PI+QUARTER_PI / 2, 2 * PI-(QUARTER_PI/2), OPEN);

//bottom of left eye

noFill();

stroke(0);

strokeWeight(1);

arc(-55, -38, 60, 47, QUARTER_PI / 2, PI-(QUARTER_PI/2), OPEN);

//bottom of right eye

noFill();

stroke(0);

strokeWeight(1);

arc(55, -38, 60, 47, QUARTER_PI / 2, PI-(QUARTER_PI/2), OPEN);

//bridge of nose

noFill();

stroke(160);

translate(0, 2);

strokeWeight(2);

arc(57, -27, 100, 130, HALF_PI+QUARTER_PI+QUARTER_PI/6, PI);

//left nostril

//fill(220);

//stroke(120);

//strokeWeight(2);

//arc(-20, 22, 30, 30, HALF_PI, PI, OPEN);

//right nostril

//fill(220);

//stroke(120);

//strokeWeight(2);

//arc(20, 22, 30, 30, 0, PI-HALF_PI, OPEN);

//center of nose

fill(220);

stroke(120);

strokeWeight(2);

arc(0, 18, 50, 50, QUARTER_PI/2, PI-QUARTER_PI/2, OPEN);

//mouth

noFill();

stroke(100);

strokeWeight(2);

arc(0, 15, 170, 110, QUARTER_PI, PI-QUARTER_PI, OPEN);

//lower lip

noFill();

stroke(160);

strokeWeight(1);

arc(0, 30, 170, 100, HALF_PI-QUARTER_PI/3, HALF_PI+QUARTER_PI/3, OPEN);

//left smile

noFill();

stroke(180);

strokeWeight(1);

arc(0, 55, 110, 50, PI-QUARTER_PI/3, PI, OPEN);

//right smile

noFill();

stroke(180);

strokeWeight(1);

arc(0, 55, 110, 50, 0, QUARTER_PI/3, OPEN);

//left hair

fill(50);

noStroke();

translate(-52, -108);

rotate(PI/3.35);

ellipse(0, 0, 70, 180);

translate(72, -97);

//right hair

fill(50);

noStroke();

rotate(-2*PI/3.5);

ellipse(0, 0, 50, 130);

}

else {

//left hair 1

fill(50);

noStroke();

translate(230, 260);

quad(-40, 200, -80, -60, -127, -60, -120, 200);

//right hair 1

fill(50);

noStroke();

quad(40, 200, 80, -60, 122, -64, 115, 200);

// face

fill(220);

stroke(0);

strokeWeight(3);

ellipse(0, 0, 214, 260);

//left temple

fill(0);

noStroke();

translate(0, 5);

quad(-115, -45, -70, -45, -70, -5, -115, -35);

//right temple

fill(0);

noStroke();

quad(115, -45, 70, -45, 70, -5, 115, -35);

//nose bridge

noFill();

stroke(0);

strokeWeight(5);

arc(0, -13, 65, 57, PI+QUARTER_PI, -QUARTER_PI);

//bottom of left lens

fill(240);

stroke(0);

strokeWeight(3);

arc(-55, -59, 90, 135, QUARTER_PI / 2, PI-(QUARTER_PI/2), OPEN);

//top of left lens

fill(240);

stroke(0);

strokeWeight(3);

arc(-55, -23, 100, 60, PI+QUARTER_PI / 2, 2 * PI-(QUARTER_PI/2), OPEN);

//bottom of right lens

fill(240);

stroke(0);

strokeWeight(3);

arc(55, -59, 90, 135, QUARTER_PI / 2, PI-(QUARTER_PI/2), OPEN);

//top of right lens

fill(240);

stroke(0);

strokeWeight(3);

arc(55, -23, 100, 60, PI+QUARTER_PI / 2, 2 * PI-(QUARTER_PI/2), OPEN);

//top of left eye

fill(255);

stroke(0);

strokeWeight(1);

arc(-55, -15, 60, 47, PI+QUARTER_PI / 2, 2 * PI-(QUARTER_PI/2), OPEN);

//bottom of left eye

fill(255);

stroke(0);

strokeWeight(1);

arc(-55, -38, 60, 47, QUARTER_PI / 2, PI-(QUARTER_PI/2), OPEN);

//center of left eye

fill(0);

noStroke();

ellipse(-55, -26, 20, 20);

//top of right eye

fill(255);

stroke(0);

strokeWeight(1);

arc(55, -15, 60, 47, PI+QUARTER_PI / 2, 2 * PI-(QUARTER_PI/2), OPEN);

//bottom of right eye

fill(255);

stroke(0);

strokeWeight(1);

arc(55, -38, 60, 47, QUARTER_PI / 2, PI-(QUARTER_PI/2), OPEN);

//center of right eye

fill(0);

noStroke();

ellipse(55, -26, 20, 20);

//bridge of nose

noFill();

stroke(160);

translate(0, 2);

strokeWeight(2);

arc(57, -27, 100, 130, HALF_PI+QUARTER_PI+QUARTER_PI/6, PI);

//left nostril

//fill(220);

//stroke(120);

//strokeWeight(2);

//arc(-20, 22, 30, 30, HALF_PI, PI, OPEN);

//right nostril

//fill(220);

//stroke(120);

//strokeWeight(2);

//arc(20, 22, 30, 30, 0, PI-HALF_PI, OPEN);

//center of nose

fill(220);

stroke(120);

strokeWeight(2);

arc(0, 18, 50, 50, QUARTER_PI/2, PI-QUARTER_PI/2, OPEN);

//mouth

noFill();

stroke(100);

strokeWeight(2);

arc(0, 15, 170, 110, QUARTER_PI, PI-QUARTER_PI, OPEN);

//lower lip

noFill();

stroke(160);

strokeWeight(1);

arc(0, 30, 170, 100, HALF_PI-QUARTER_PI/3, HALF_PI+QUARTER_PI/3, OPEN);

//left smile

noFill();

stroke(180);

strokeWeight(1);

arc(0, 55, 110, 50, PI-QUARTER_PI/3, PI, OPEN);

//right smile

noFill();

stroke(180);

strokeWeight(1);

arc(0, 55, 110, 50, 0, QUARTER_PI/3, OPEN);

//left hair

fill(50);

noStroke();

translate(-52, -108);

rotate(PI/3.35);

ellipse(0, 0, 70, 180);

translate(72, -97);

//right hair

fill(50);

noStroke();

rotate(-2*PI/3.5);

ellipse(0, 0, 50, 130);

}

}
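The repetition in the blink could be trimmed by deciding once per frame whether the eyes are closed and sharing everything else. The outline below is one possible restructuring, not the code that produced the image: `isBlinking`, `drawClosedEyes`, and `drawOpenEyes` are hypothetical names, and only the left eye is shown to keep it short.

```javascript
// Same test as the if-condition in draw(), pulled out as a pure function.
function isBlinking(sec, ms) {
  return sec % 5 === 0 && Math.abs(Math.sin(ms / 1000)) <= 1 / 4;
}

// Closed eye: only the lower lid arc, unfilled.
function drawClosedEyes() {
  noFill();
  stroke(0);
  strokeWeight(1);
  arc(-55, -38, 60, 47, QUARTER_PI / 2, PI - QUARTER_PI / 2, OPEN);
}

// Open eye: white upper and lower arcs plus a black pupil.
function drawOpenEyes() {
  fill(255);
  stroke(0);
  strokeWeight(1);
  arc(-55, -15, 60, 47, PI + QUARTER_PI / 2, TWO_PI - QUARTER_PI / 2, OPEN);
  arc(-55, -38, 60, 47, QUARTER_PI / 2, PI - QUARTER_PI / 2, OPEN);
  fill(0);
  noStroke();
  ellipse(-55, -26, 20, 20);
}

function setup() {
  createCanvas(460, 600);
}

function draw() {
  background(150);
  translate(230, 265); // face center, as in the code above
  // ...hair, face, glasses: drawn once, shared by both states...
  if (isBlinking(second(), millis())) {
    drawClosedEyes();
  } else {
    drawOpenEyes();
  }
  // ...nose, mouth: drawn once, shared by both states...
}
```

Keeping the timing test in its own function also makes the odd interval easier to experiment with, since the numbers live in one place.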